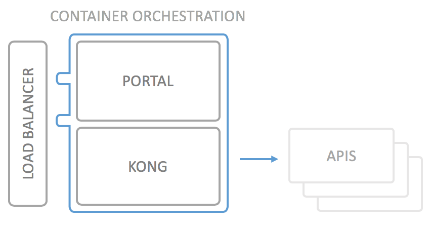

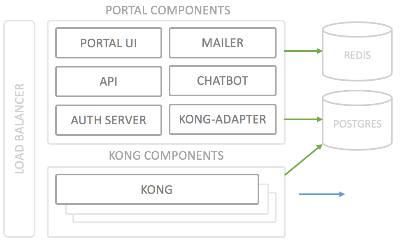

## Deployment Architecture

The API Portal relies on proven technology to manage traffic: the portal itself sits either behind HAProxy or, on Kubernetes, behind a standard Ingress Controller, while the actual API traffic is proxied by the excellent API gateway Kong by Mashape.
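As a rough illustration of this split, the Python sketch below mimics the host-based decision an edge proxy makes. Requests for the portal host go to the node.js portal, and requests for the API host go to Kong. The host names, backend addresses and the `route` function are hypothetical. Port 8000 is Kong's default proxy port, and the portal port is an assumption. HAProxy or an Ingress Controller expresses the same rule as configuration rather than code.

```python
# Hypothetical host names; a real deployment configures its own.
PORTAL_HOST = "portal.example.com"
API_HOST = "api.example.com"

# Hypothetical backends: the portal UI and Kong's default proxy port (8000).
BACKENDS = {
    PORTAL_HOST: "http://portal:3000",
    API_HOST: "http://kong:8000",
}


def route(host_header: str) -> str:
    """Pick a backend from the Host header, like a host-based proxy rule."""
    host = host_header.split(":", 1)[0].lower()  # drop an optional port, normalize case
    try:
        return BACKENDS[host]
    except KeyError:
        raise LookupError(f"no backend for host {host!r}") from None


if __name__ == "__main__":
    print(route("portal.example.com"))   # portal traffic
    print(route("API.example.com:443"))  # API traffic, routed to Kong
```

Keeping the portal and the gateway on separate hosts lets the edge proxy stay simple, while Kong alone handles API-level concerns such as authentication and rate limiting.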

The portal components are implemented in Node.js and kept as lightweight as possible.

When deploying on a single Docker host, the "Kickstarter" can create suitable docker-compose.yml files that include HAProxy. For Kubernetes, the Helm chart uses existing Ingress Controllers.
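The Kickstarter's actual output is not shown here. The following Python sketch only assumes what a single-host layout might look like: HAProxy is the only service that publishes ports on the Docker host and forwards traffic to the portal and Kong containers. The service names, image tags, the `compose_config` function and the mounted `haproxy.cfg` path are hypothetical. The result is printed as JSON, which YAML parsers generally accept, so the text could be saved as a docker-compose.yml file.

```python
import json


def compose_config(portal_image: str, kong_image: str) -> dict:
    """Build a hypothetical compose layout with HAProxy in front of portal and Kong."""
    return {
        "version": "2",
        "services": {
            # Only the edge proxy is reachable from outside the Docker host.
            "haproxy": {
                "image": "haproxy:2.8",
                "ports": ["80:80", "443:443"],
                # The official image expects its config at this path.
                "volumes": ["./haproxy.cfg:/usr/local/etc/haproxy/haproxy.cfg:ro"],
                "depends_on": ["portal", "kong"],
            },
            # Internal services: reachable only via the compose network.
            "portal": {"image": portal_image},
            "kong": {"image": kong_image},
        },
    }


if __name__ == "__main__":
    config = compose_config("example/portal:latest", "kong:latest")
    print(json.dumps(config, indent=2))
```

On Kubernetes, the Ingress Controller takes over the role of the `haproxy` service, so the chart does not need to ship its own edge proxy.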